PACE: marrying generalization in PArameter-efficient fine-tuning with Consistency rEgularization (NeurIPS 2024 Spotlight)

Yao Ni, Shan Zhang, Piotr Koniusz

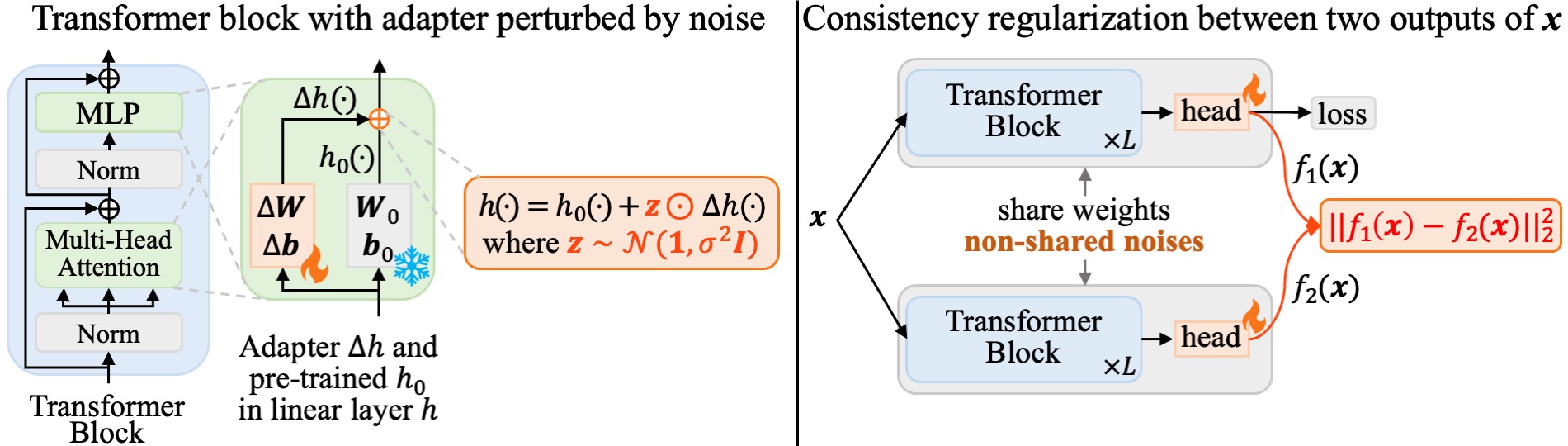

💡 Consistency regularization across different perturbations reduces gradient norms, improving generalization.

💡 Consistency regularization on adapter features aligns fine-tuned models with pre-trained ones, preserving knowledge.
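To make the two points above concrete, here is a minimal toy sketch. It is not the released PACE-Vision code and does not reproduce the paper's exact formulation. It assumes a single bias-free linear layer with frozen "pre-trained" weights plus a linear adapter, where the adapter output is scaled element-wise by multiplicative Gaussian noise z ~ N(1, σ²). The consistency term is the mean squared difference between two independently perturbed forward passes on the same input. The function names (`perturbed_forward`, `consistency_loss`) and the `noise_std` parameter are hypothetical and chosen for illustration only.

```python
import random
from typing import List

Vector = List[float]
Matrix = List[Vector]


def linear(weights: Matrix, x: Vector) -> Vector:
    """Bias-free dense layer y = W x, with W stored row by row."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def perturbed_forward(frozen_w: Matrix, adapter_w: Matrix, x: Vector,
                      noise_std: float, rng: random.Random) -> Vector:
    """Frozen pre-trained output plus an adapter output that is scaled
    element-wise by multiplicative Gaussian noise z ~ N(1, noise_std^2).
    This toy setup is an assumption, not the paper's architecture."""
    base = linear(frozen_w, x)
    delta = linear(adapter_w, x)
    return [b + rng.gauss(1.0, noise_std) * d for b, d in zip(base, delta)]


def consistency_loss(frozen_w: Matrix, adapter_w: Matrix, x: Vector,
                     noise_std: float, rng: random.Random) -> float:
    """Hypothetical consistency term: mean squared difference between two
    independently perturbed passes on the same input x."""
    out_a = perturbed_forward(frozen_w, adapter_w, x, noise_std, rng)
    out_b = perturbed_forward(frozen_w, adapter_w, x, noise_std, rng)
    return sum((a - b) ** 2 for a, b in zip(out_a, out_b)) / len(out_a)


if __name__ == "__main__":
    rng = random.Random(0)
    frozen = [[1.0, 0.0], [0.0, 1.0]]   # stand-in for frozen pre-trained weights
    adapter = [[0.5, 0.0], [0.0, 0.5]]  # stand-in for learned adapter weights
    x = [1.0, 2.0]
    # Average over many noise draws to approximate the expected penalty.
    trials = 20_000
    avg = sum(consistency_loss(frozen, adapter, x, 0.1, rng)
              for _ in range(trials)) / trials
    print(f"mean consistency loss: {avg:.5f}")
```

In this toy, the two outputs differ only by (z_a − z_b)·d, where d is the adapter output, and (z_a − z_b) has variance 2σ². The expected penalty is therefore 2σ²·mean(d²); with d = [0.5, 1.0] and σ = 0.1 that is 0.0125, so the printed average should land close to it. The penalty vanishes when the adapter contributes nothing, which illustrates, under these simplifying assumptions, why enforcing consistency pulls the fine-tuned model toward the frozen pre-trained one.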

Code for PACE-Vision is released.

If you find our theory or code helpful in your work, please cite our paper:

```bibtex
@inproceedings{ni2024pace,
  title={{PACE}: marrying the generalization of {PA}rameter-efficient fine-tuning with Consistency rEgularization},
  author={Yao Ni and Shan Zhang and Piotr Koniusz},
  booktitle={The Thirty-eighth Annual Conference on Neural Information Processing Systems},
  year={2024},
  url={https://openreview.net/forum?id=cOuLbPhOT1}
}
```